Foreword:

This guide is for environments where Exchange On-Premise 2013/2016/2019 is configured as a hybrid deployment with Microsoft 365 Exchange Online.

Requirements:

It assumes you have an administrator mailbox account that can log in to Exchange On-Premise as an Exchange Administrator and to Microsoft 365 Exchange Online as a Global Admin.

You will also require an active, functional mailbox in a mailbox database on the on-premise Exchange server. This mailbox does not need any administrative rights and doesn’t even have to be in active use by a user; it just needs to exist for testing purposes.

The Exchange On-Premise needs to be externally accessible on ports: 25, 80, and 443.
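One way to confirm those ports are reachable is Test-NetConnection, run from a machine outside the customer network. This is a minimal sketch only; mail.domain.com is a placeholder for the public FQDN of the Exchange server.

```powershell
# Run from a machine OUTSIDE the customer network.
# 'mail.domain.com' is a placeholder for the public FQDN of the Exchange server.
$exchangeFqdn = 'mail.domain.com'

foreach ($port in 25, 80, 443) {
    # TcpTestSucceeded is True when a TCP connection to the port could be opened.
    $result = Test-NetConnection -ComputerName $exchangeFqdn -Port $port -WarningAction SilentlyContinue
    '{0}:{1} reachable = {2}' -f $exchangeFqdn, $port, $result.TcpTestSucceeded
}
```

Keep in mind that some internet connections block outbound port 25, so a failure on 25 from a home or mobile connection does not necessarily mean the firewall rule is wrong.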

Prerequisite Checks:

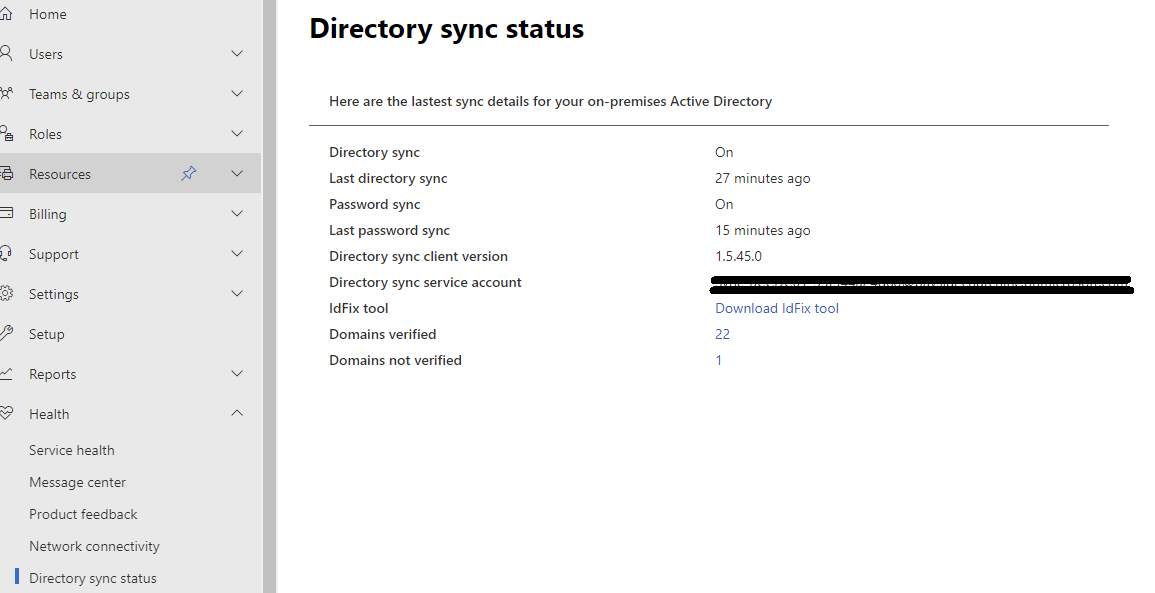

First, we need to check the health of the AD-Sync deployment. In the Microsoft 365 Admin Center, head to Health > Directory sync status

Check that Directory sync is on & healthy, no errors, and make sure password sync is also working.

Ideally, the most recent sync should be less than 40 minutes old.

If AD Sync isn’t working properly, address this problem before continuing any further.
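If you have access to the server running Azure AD Connect, the sync scheduler can also be checked from PowerShell. A short sketch, assuming the ADSync module that installs with Azure AD Connect is present on that server:

```powershell
# Run on the server hosting Azure AD Connect, in an elevated PowerShell session.
Import-Module ADSync

# Shows whether the sync scheduler is enabled and when the next cycle is due.
Get-ADSyncScheduler

# Optionally trigger a delta sync instead of waiting for the next scheduled cycle.
Start-ADSyncSyncCycle -PolicyType Delta
```

After a delta sync, refresh the Directory sync status page to confirm the last sync time has updated.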

Microsoft has recently split off support for Microsoft Windows Server 2012 R2, so this version of Windows is less likely to receive any updates. If you’re in this situation, you may need to download Azure AD Connect version 1.6.16.0. More on the Azure AD Connect version history here. Please note that as of August 2022, all Azure AD Connect 1.x.x.x versions are retired, as they use Microsoft SQL Server 2012, which is no longer supported.

If you’re in an environment still operating on Windows Server 2012 R2, now is the time to start planning an upgrade – even if that upgrade means deploying a more modern version of Windows Server (2016/2019/2022) as a domain member or secondary domain controller, and configuring Azure AD Sync on this newer server instead.

Certificate Installation on On-Premise Exchange server:

You will need to already have your new certificate file(s) – ideally, you want the full stack certificate file in pfx format.

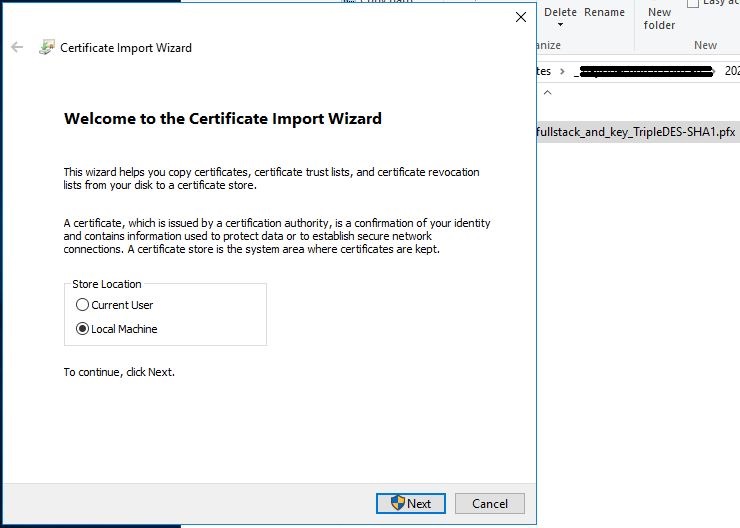

Log in to your Exchange server’s desktop environment as a domain admin and copy the PFX (PKCS #12) file to a local folder on the Exchange server.

Double-click the certificate file to launch the certificate installation wizard

Select Local Machine and click Next

Confirm by clicking Yes if you are prompted with the UAC elevation prompt

On the next screen, just click Next

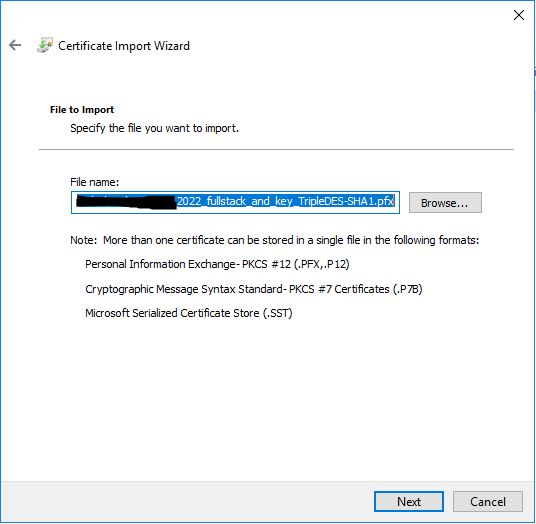

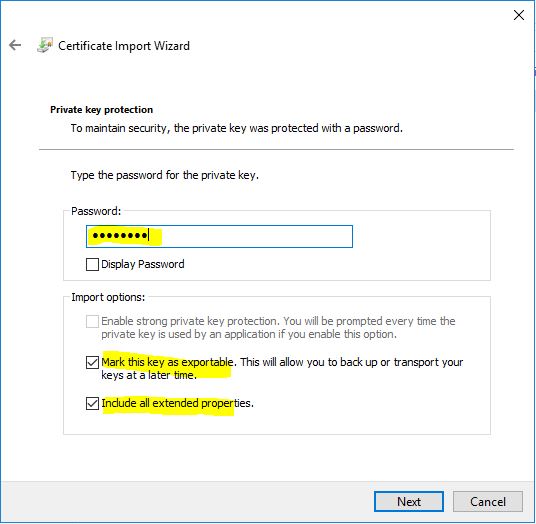

This next step is crucial, especially if you later need to export the certificate for use elsewhere…

Enter in the password for the PFX file.

Ensure that both options are ticked for:

- Mark this key as exportable

- Include all extended properties

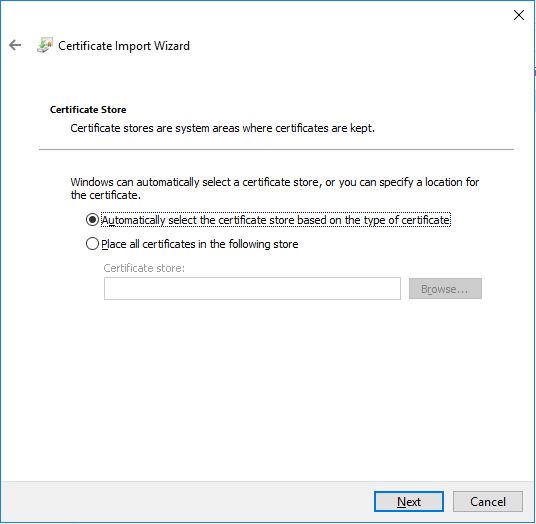

Now, if the certificate is properly formatted, the Automatic store selection should just work fine here. If not, select the Personal certificate store.
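For reference, the same import can be done without the wizard using Import-PfxCertificate. This is a sketch under assumptions: the file path is a placeholder, and the session must be an elevated PowerShell session on the Exchange server.

```powershell
# Placeholder path; adjust to wherever the PFX file was copied.
$pfxPath = 'C:\Temp\mail.domain.com.pfx'

# Prompt for the PFX password so it is not stored in the script or history.
$pfxPassword = Read-Host -Prompt 'PFX password' -AsSecureString

# Cert:\LocalMachine\My is the local machine Personal store.
# -Exportable corresponds to the "Mark this key as exportable" tick box in the wizard.
Import-PfxCertificate -FilePath $pfxPath -CertStoreLocation 'Cert:\LocalMachine\My' -Password $pfxPassword -Exportable
```

The command outputs the imported certificate, including its thumbprint, which is handy for the later steps.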

Now we need to check the certificate in the Certificates snap in for the local machine and ensure we give it a meaningful name

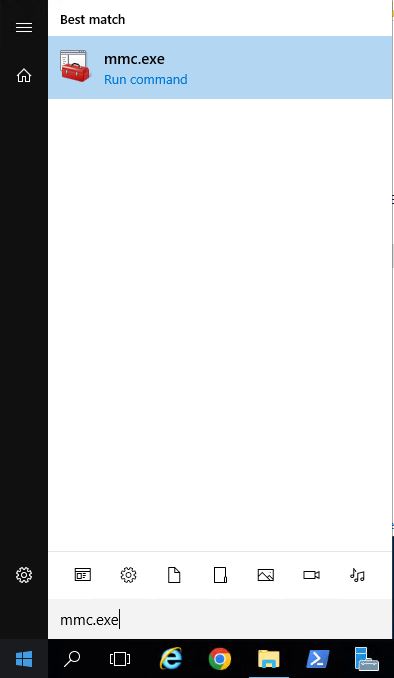

Click Start, type in mmc.exe – once it’s listed, press Enter (note: if UAC is on, you will be prompted to click Yes again).

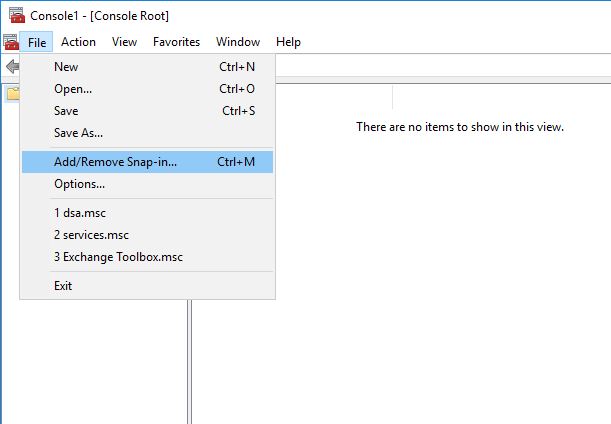

Click File > Add/Remove Snap-in…

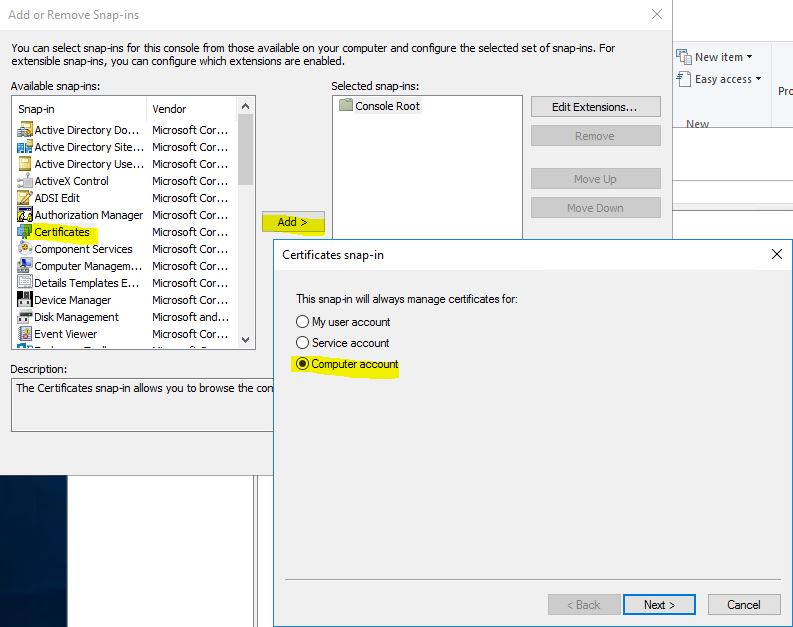

In the left box, choose Certificates, click the Add button

It will prompt you to select what certificates to manage – select Computer account and click Next

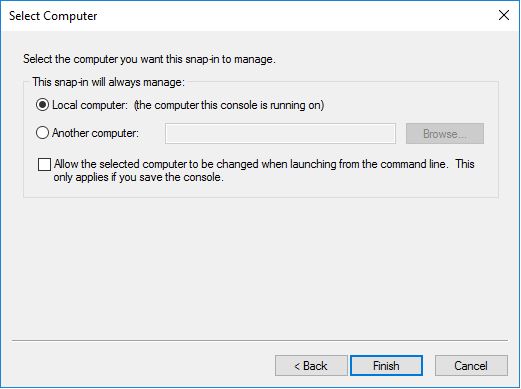

Select Local computer and click Finish

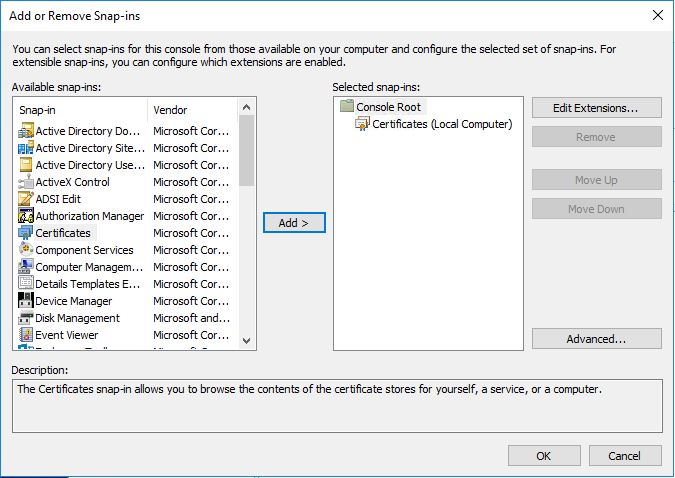

Click OK

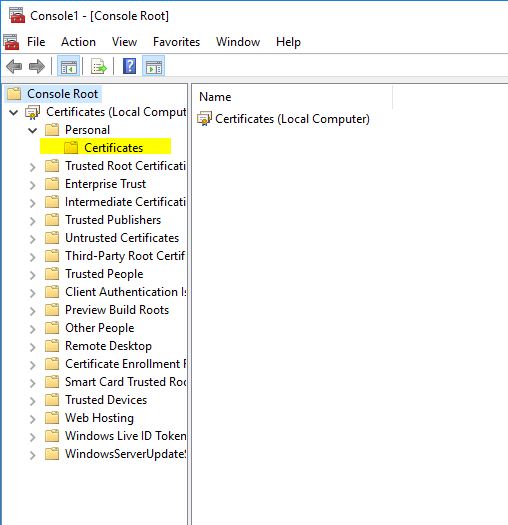

Expand out Certificates > Personal > Certificates

Click on the Certificates folder you revealed under Personal

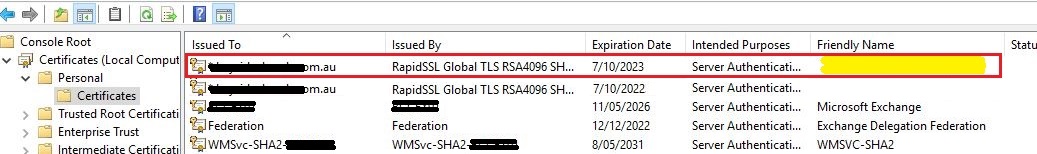

You will be presented with all the certificates

You will see both the expiring / expired certificate and the newly installed certificate. Note that neither of these has a ‘friendly name’ – we’re going to fix this now.

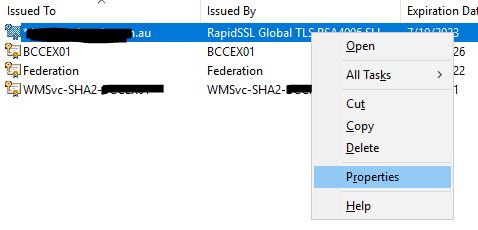

Right-click on the newly installed certificate and click Properties

In the properties window, give the certificate a friendly name – this is very helpful for identifying this certificate when there are several with the same SAN. At the very least, I like to use FQDN_YYYY: the fully qualified domain name, an underscore, and the year the certificate was installed. Some techs will use the installation date or the expiry date. If you do this, also add the word installed or expires so the date is more meaningful the next time this is revisited.

Once you have the name, click OK.
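The friendly name can also be set from an elevated PowerShell session through the certificate provider. A minimal sketch; the thumbprint and the friendly name shown are placeholders, not values from this environment.

```powershell
# List certificates in the local machine Personal store to find the new one.
Get-ChildItem Cert:\LocalMachine\My | Format-List Subject, NotAfter, Thumbprint, FriendlyName

# Replace the placeholder with the thumbprint of the newly installed certificate.
$thumbprint = '<thumbprint>'
$newCert = Get-Item "Cert:\LocalMachine\My\$thumbprint"

# Example name following the FQDN_YYYY convention described above.
$newCert.FriendlyName = 'mail.domain.com_2024'
```

Re-running the first command should then show the new friendly name against that certificate.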

Update Bindings in IIS:

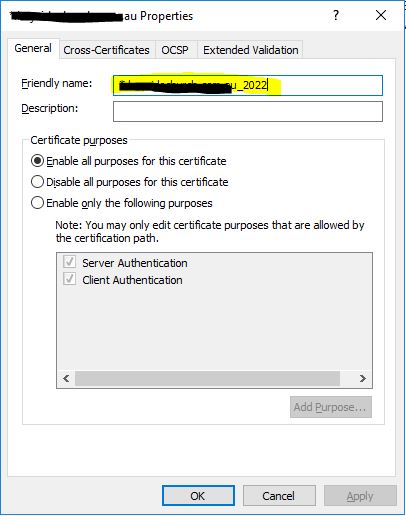

Now the friendly name filed in the certificates list will have the name you entered against that certificate. Go ahead and close the MMC certificates console.

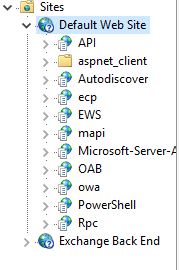

Next we need to launch the IIS interface and expand out all the sites.

First click on the Default Web Site and expand it – this will likely have all the front end facing sites & services.

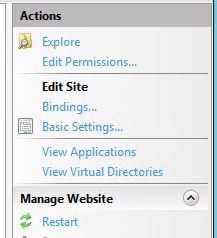

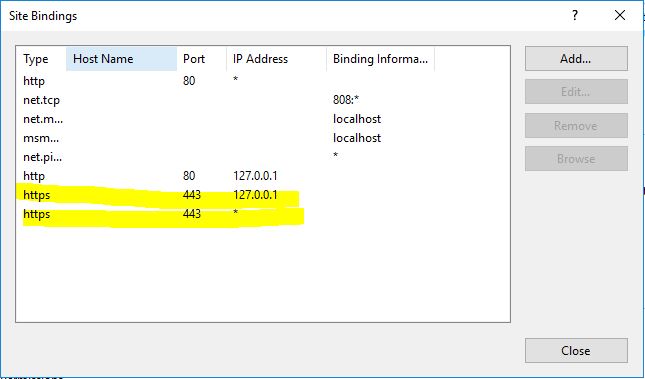

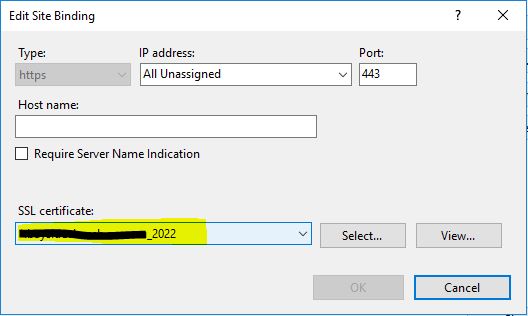

Now on the right side under the Actions menu, click Bindings…

Any of the bindings in here that are on https need to have the new certificate applied

Double-click and set the new certificate for each one

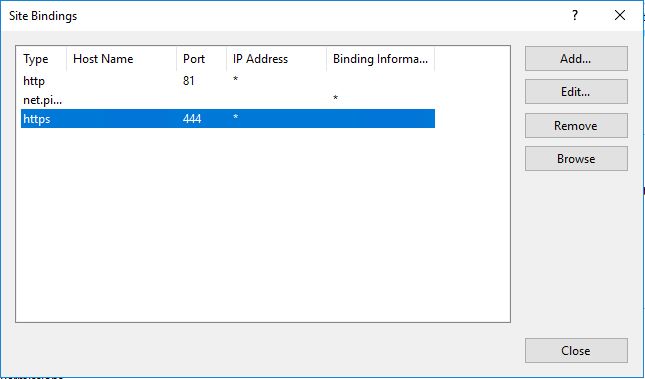

Once these are done, move onto the Exchange Back End pool

Again, on the right, click to edit the Bindings in the Actions menu

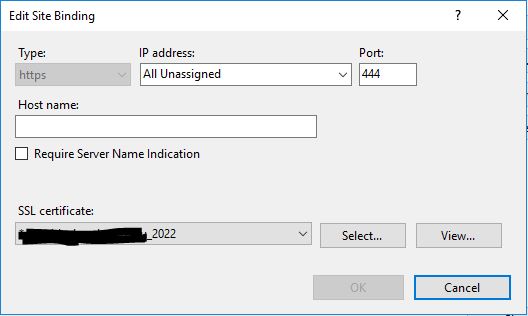

There’s usually only a single binding on https, and it uses port 444.

Note: This is almost always meant to use the default “Microsoft Exchange” certificate issued by the Exchange server, so don’t change it unless for some reason it already has the expiring signed SSL certificate or is required to have a 3rd party certificate!

If it does need changing, edit this binding to use the new certificate

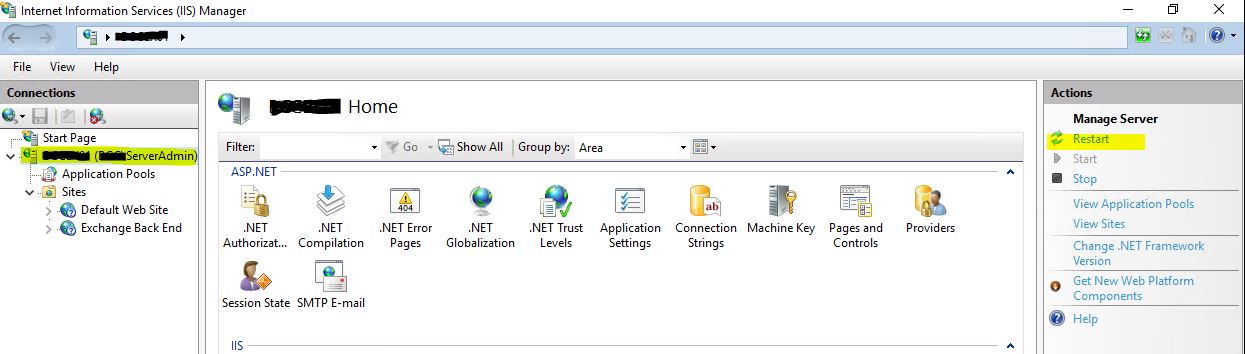

Once the bindings have all been updated, you may need to restart IIS – note this will disconnect any connected clients.

In the tree on the left, click on the server, then on the right under the Actions menu, click Restart – this may take a minute
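To double-check which certificate each https binding ended up with, the bindings can be listed from PowerShell. A read-only sketch, assuming the WebAdministration module that ships with IIS is available on the Exchange server:

```powershell
Import-Module WebAdministration

# List every https binding, the site it belongs to, and the thumbprint bound to it.
Get-ChildItem IIS:\Sites | ForEach-Object {
    $siteName = $_.Name
    Get-WebBinding -Name $siteName -Protocol https | ForEach-Object {
        [pscustomobject]@{
            Site       = $siteName
            Binding    = $_.bindingInformation   # e.g. *:443: or *:444:
            Thumbprint = $_.certificateHash
        }
    }
}
```

The front end bindings should show the new certificate's thumbprint, while the port 444 binding on the back end site would normally still show the self-signed “Microsoft Exchange” certificate.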

Update Connectors in Exchange:

Log in to the Exchange ECP Web UI with the administrator account.

While you’re at it, open another tab in the same browser and use it to log in to the Microsoft 365 Admin Center with the same account credentials.

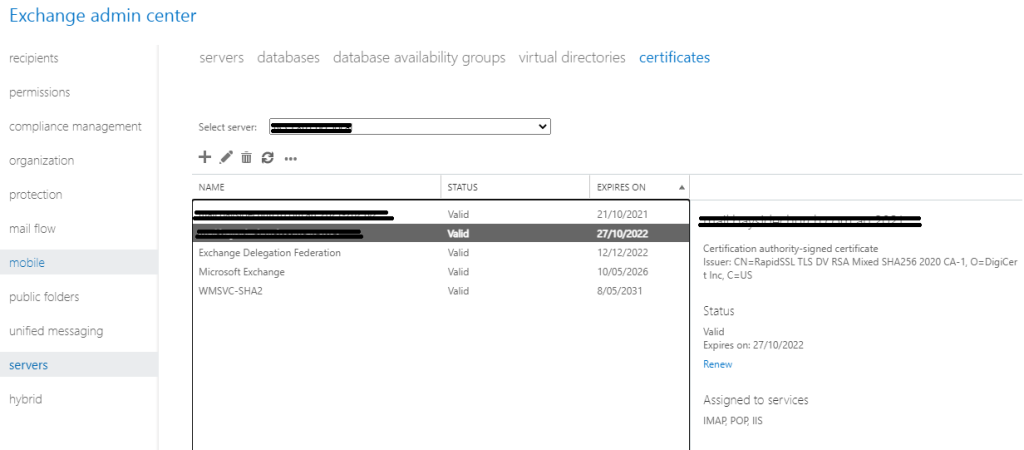

In the On-Premise Exchange ECP, head to Servers > Certificates

Double-click the new certificate

Locate the Thumbprint, copy and paste this into a notepad session (you’ll need this shortly)

You will need to select Services and then tick the boxes for:

- SMTP

- IMAP (optional, but not available for wildcard certificates)

- POP (optional, but not available for wildcard certificates)

- IIS

(Note that IMAP and POP are optional, but recommended to choose them if clients are connecting using these protocols)

Click Save

You may receive a Warning prompt about overwriting the existing default SMTP certificate, Choose Yes.

Update Default Send and Receive Connectors in Exchange On-Premise PowerShell:

Launch an Exchange PowerShell for the On-Premise Exchange server

Issue the command:

Get-ExchangeCertificate

This will list all of the installed SSL Certificates on the Exchange server

Note that the Thumbprint for each is listed – confirm that your new SSL Certificate’s Thumbprint appears there as well.
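If the default output is hard to read, a slightly more detailed listing makes the new certificate easier to pick out. This is just one possible way to format it:

```powershell
# Sort by expiry date so the expiring and the new certificate are easy to tell apart.
# Services shows which Exchange services (IIS, SMTP, IMAP, POP) each certificate is enabled for.
Get-ExchangeCertificate |
    Sort-Object NotAfter |
    Format-List FriendlyName, Subject, Thumbprint, NotAfter, Services
```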

Let’s store the certificate and its TLS certificate name in variables in the PowerShell session

$cert = Get-ExchangeCertificate -Thumbprint XXXXXX

$tlscertificatename = "<I>$($cert.Issuer)<S>$($cert.Subject)"

(The second line will be used a little further down)

Let’s enable secure SMTP using the new certificate:

Enable-ExchangeCertificate $cert -services SMTP

Note: Due to recent Exchange updates, if you get an error similar to the below running this, change the command to:

Enable-ExchangeCertificate <thumbprint> -services SMTP

and it should work

Now let’s get the Send Connectors list and update the connector with the new certificate

Get-SendConnector

This will list all the send connectors, locate the connector used to connect with Office 365 – it will look something like:

"Outbound to Office 365"

Let’s set this connector to use the new certificate:

Set-SendConnector "Outbound to Office 365" -TlsCertificateName $tlscertificatename

Repeat for any other send connectors that are in use

Next, we need to do the same for the Receive Connector(s)

Issue the command:

Get-ReceiveConnector

to get the list of all the receive connectors

Identify which connector(s) are using secure protocols for incoming connection (incl. from Office 365). Eg:

- <ExchServer>\Default Frontend <ExchServer>

- <ExchServer>\Client Frontend <ExchServer>

- <ExchServer>\Client Proxy <ExchServer>

Where <ExchServer> is the local host name of the Exchange server.

You may have multiple receive connectors that require updating, so the below will need to apply to these as well.

Set-ReceiveConnector "<ExchServer>\Default Frontend <ExchServer>" -TlsCertificateName $tlscertificatename

Update the Office 365 receive connector as well.
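Before removing the old certificate, it may be worth confirming which certificate name each connector now references. A short read-only check in the same Exchange PowerShell session:

```powershell
# Connectors updated in the steps above should show the issuer/subject of the new certificate.
Get-SendConnector | Format-List Name, TlsCertificateName

# Connectors with an empty TlsCertificateName are not pinned to a specific certificate.
Get-ReceiveConnector | Format-List Identity, TlsCertificateName
```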

You can now delete the expiring SSL certificate from the Exchange server (via IIS or Certificate manager).

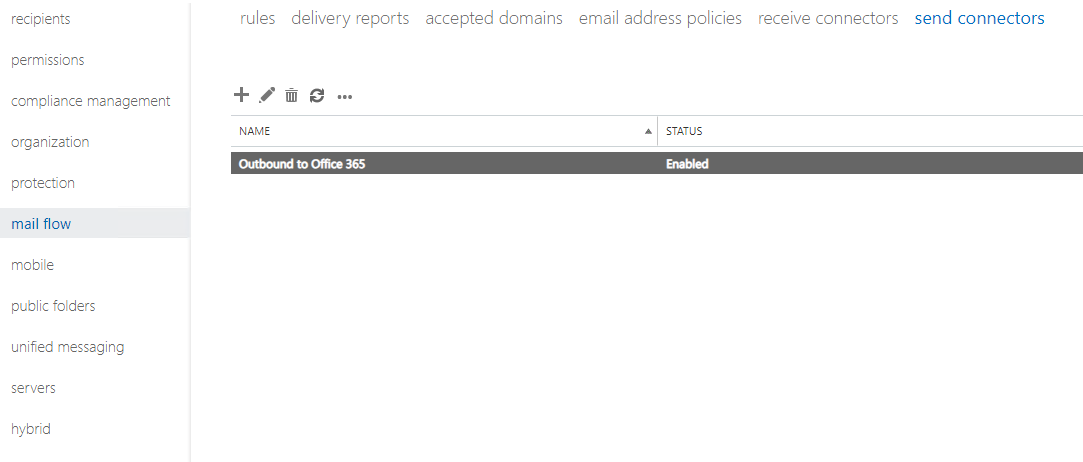

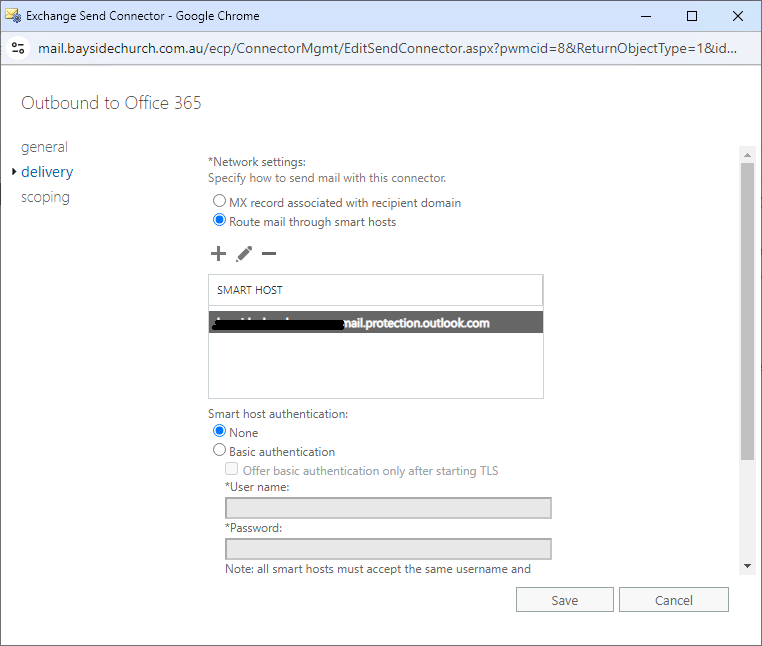

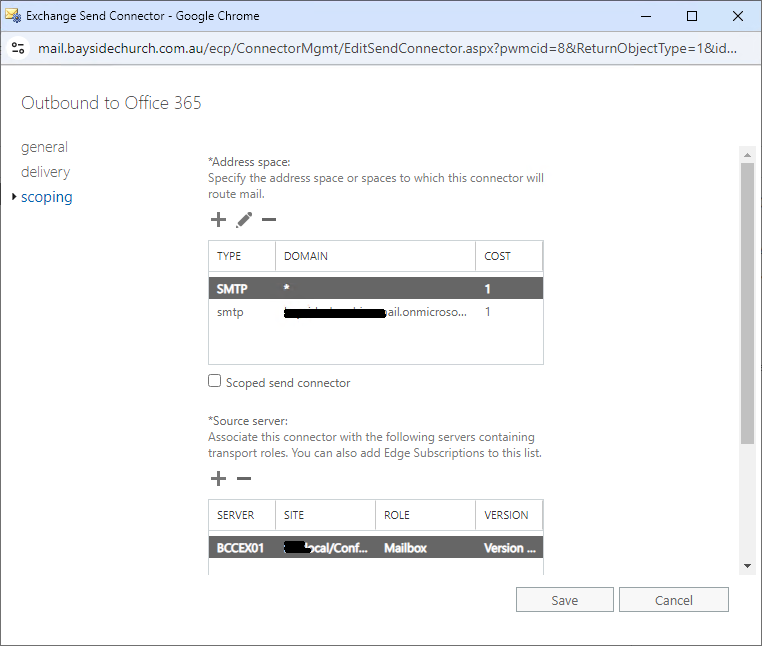

Head back to Exchange ECP > Mail Flow > Send Connectors

Open the Send Connector used by Office 365 and note down the following settings:

Delivery > mail routing (MX or Smart Host)

Scoping > Address Space

Again, note these settings down, as the Hybrid Configuration Wizard will overwrite them and may break some mail flow.
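As an alternative to writing the settings down by hand, they can be captured from Exchange PowerShell. A sketch only; the connector name is the example used earlier in this guide and the output path is a placeholder.

```powershell
# Example connector name from earlier in this guide; adjust to match the environment.
$connectorName = 'Outbound to Office 365'

# Display the routing and scoping settings the wizard is likely to overwrite.
Get-SendConnector $connectorName |
    Format-List Name, AddressSpaces, DNSRoutingEnabled, SmartHosts, RequireTLS, TlsCertificateName, TlsDomain, Fqdn

# Keep a copy on disk (placeholder path) so the values can be compared after the wizard has run.
Get-SendConnector $connectorName | Export-Clixml -Path 'C:\Temp\SendConnector-before-HCW.xml'
```

DNSRoutingEnabled and SmartHosts correspond to the Delivery setting (MX or Smart Host), and AddressSpaces corresponds to Scoping > Address Space.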

Re-Validate the Hybrid Configuration:

The below process has been completely re-written as the process has changed extensively. Migrations are no longer performed on the on-premise Exchange server.

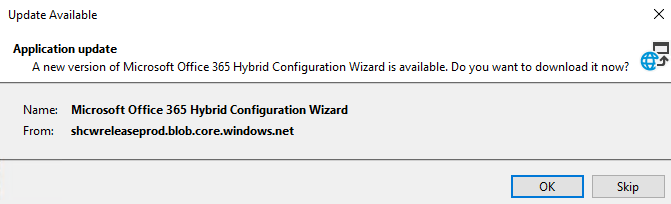

From the desktop of the Exchange server launch the Microsoft Office 365 Hybrid Configuration Wizard:

It’s likely an update will be offered, so please proceed with the update installation

Once all updated and installed, click Next at the Welcome screen

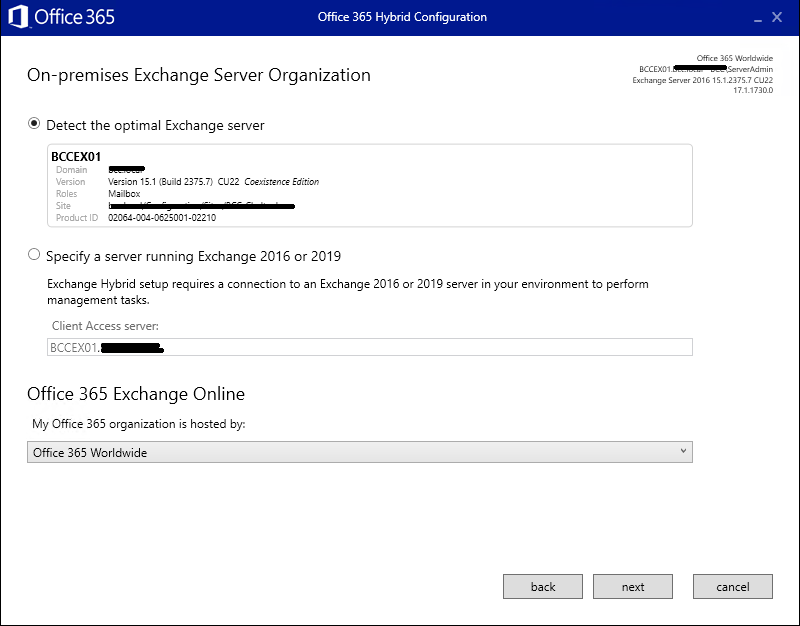

Wait for the wizard to perform its initial detection task – once done, it should show the correct Exchange server and have Office 365 Worldwide selected. Click Next

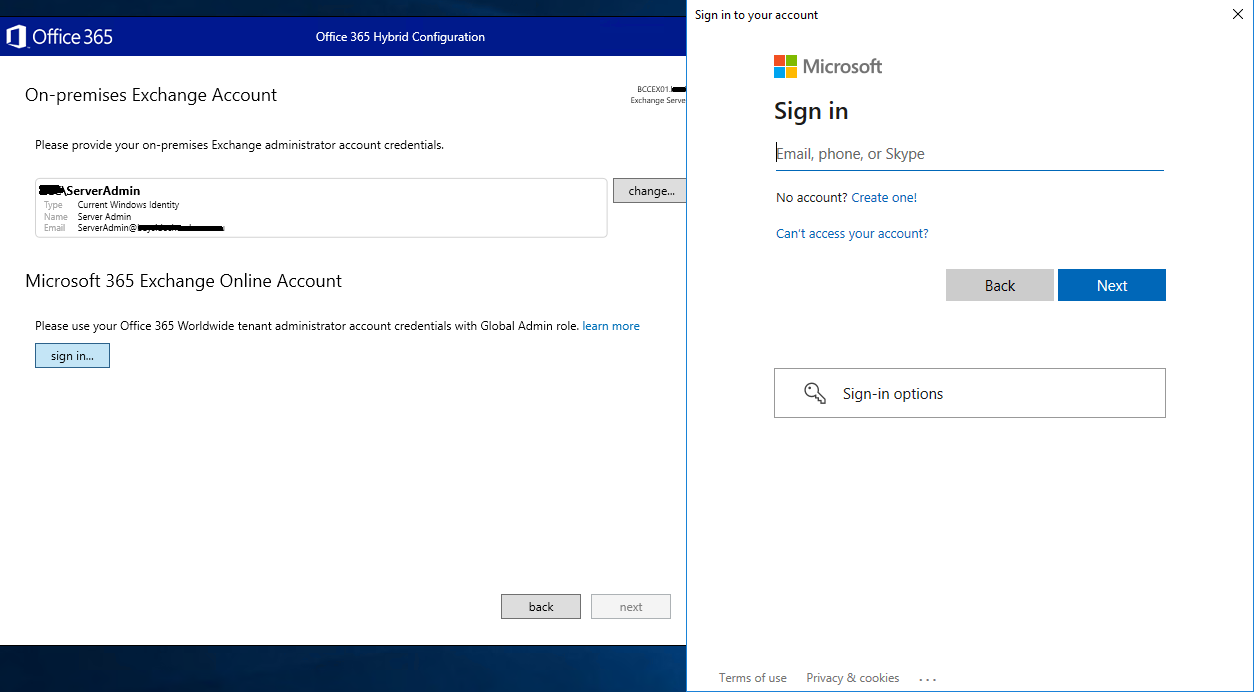

Ensure a domain admin account has been auto selected for the on-premise Exchange server.

Click Sign in for the 365 tenant admin account and authenticate as usual

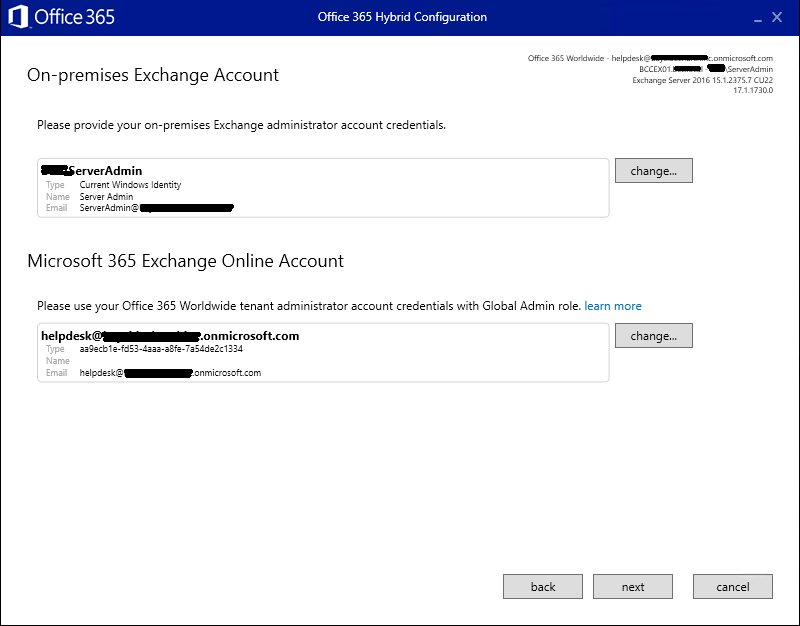

Once signed in for on-prem and 365, it should look like the below – click Next

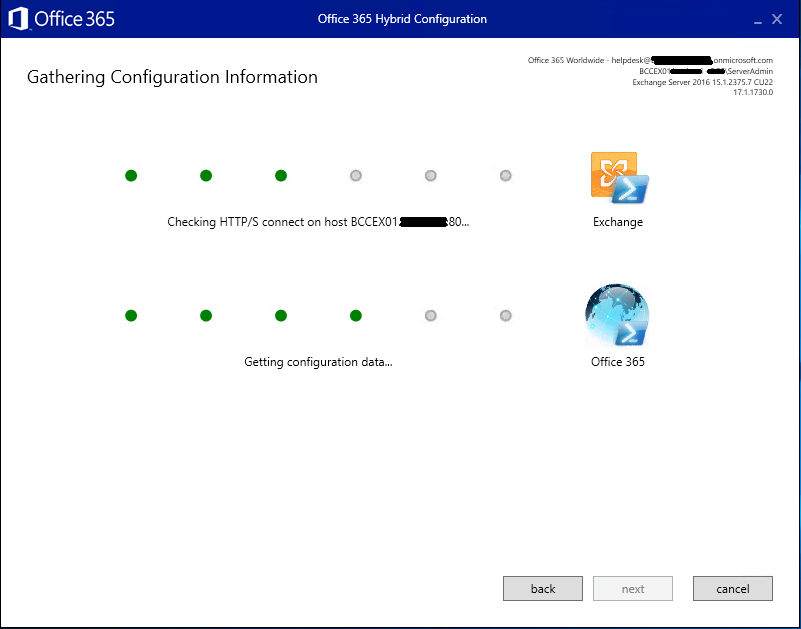

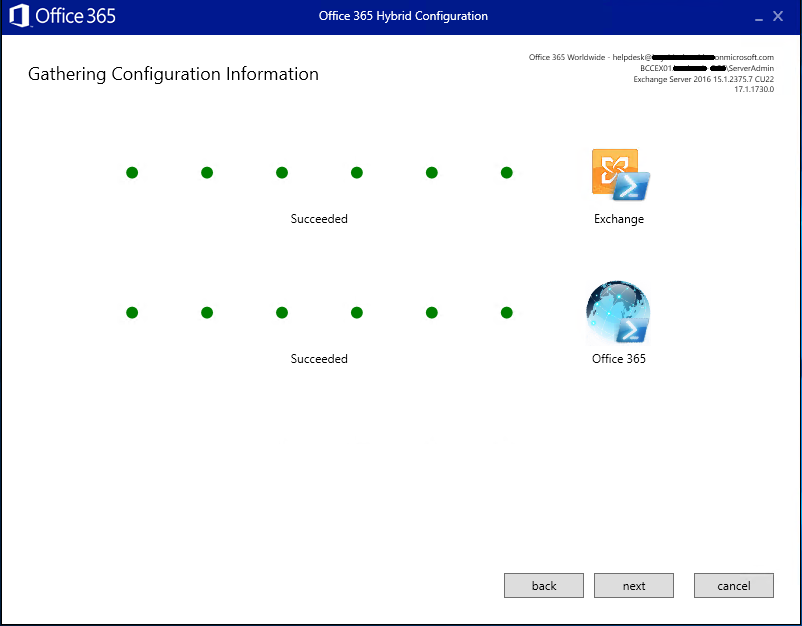

The wizard will spend some time ‘gathering information’

Sometimes issues do crop up here, usually if the configuration is broken or very old, or if certain parts of Exchange aren’t working properly.

You’ll need to spend some time addressing the concerns raised before you get a successful result on both on-prem and 365:

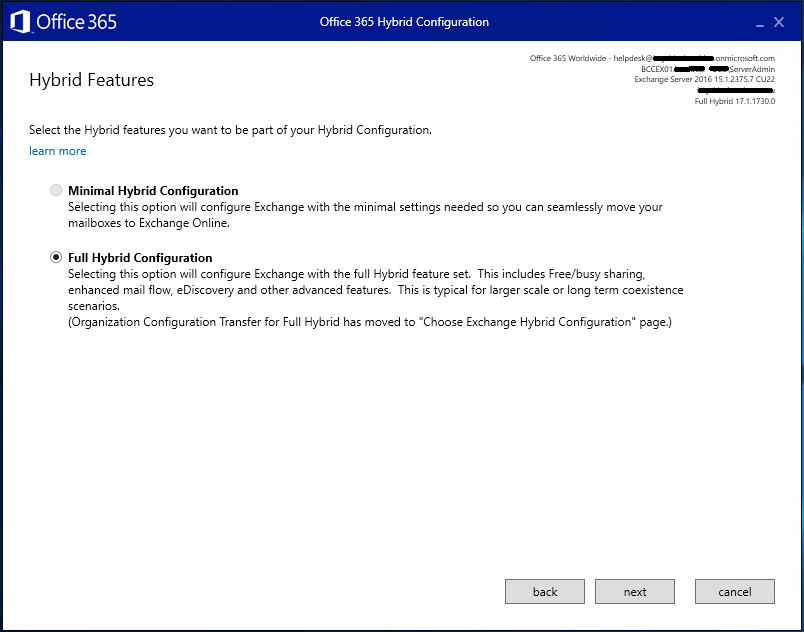

It’s likely that Full Hybrid Configuration will be selected, and Minimal Hybrid is greyed out – this is fine

In the event that Minimal is selected, discuss with the lead tech for this client to confirm this is correct, as we usually set up Full Hybrid Configuration.

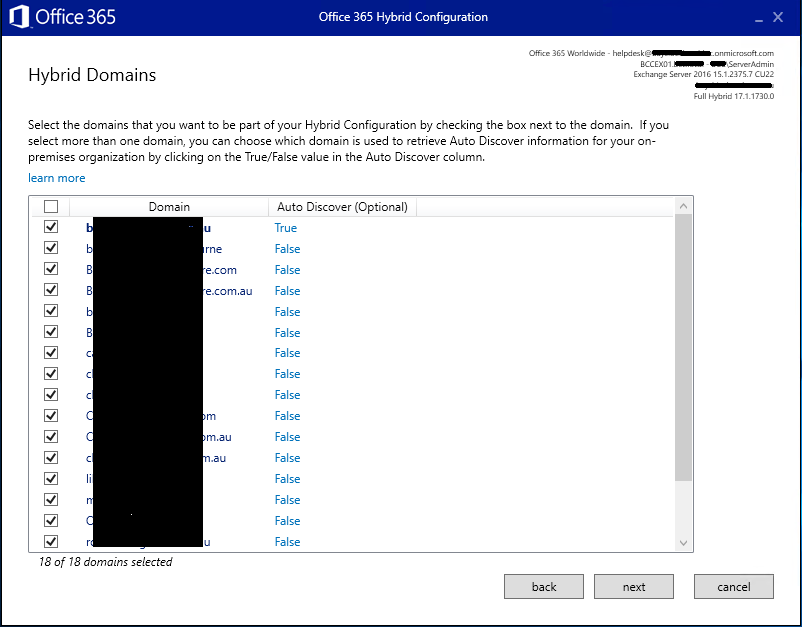

The next screen will present the domain names present and selected.

If unsure, discuss with the lead tech, but in most cases, all domains will be selected

(some clients have way too many domain names)

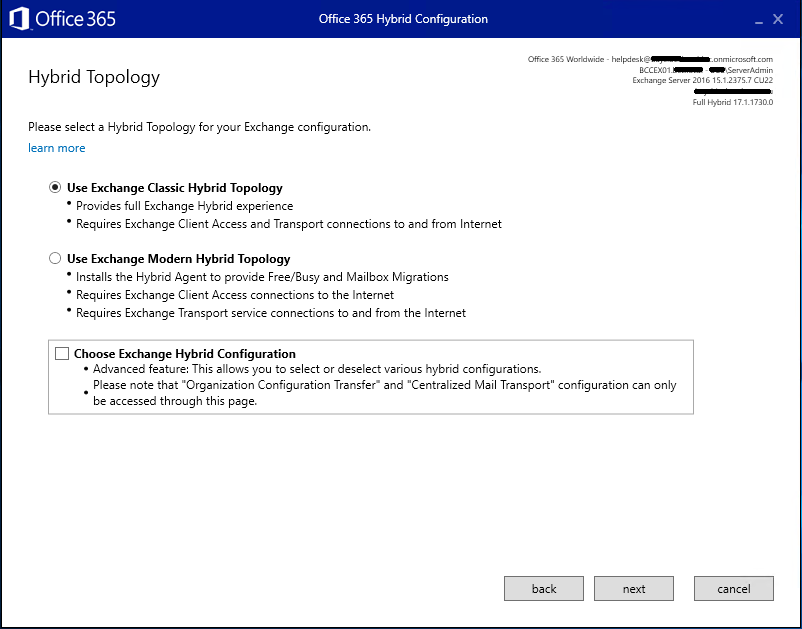

At this time, where Hybrid Sync is configured with Classic Hybrid Topology, we’re still using this, but in the future we’ll likely migrate to Modern Hybrid Topology (likely when pushed by Microsoft to do so)

Click Next

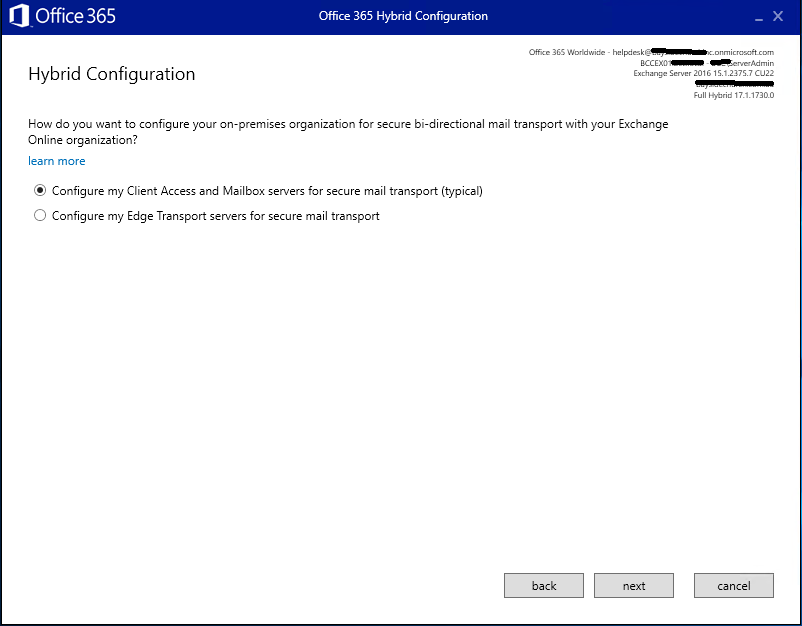

This next screen will usually be smart enough to figure out if the on-premise Exchange server is using CAS/MBX or EDGE connector roles – but be sure to double check – especially with larger Exchange deployments

The next two screens just ask you to confirm which Exchange server to use for hosting Receive Connectors and Send Connectors – 99.99% of the time it’s the same on-premise Exchange server, so click Next for each

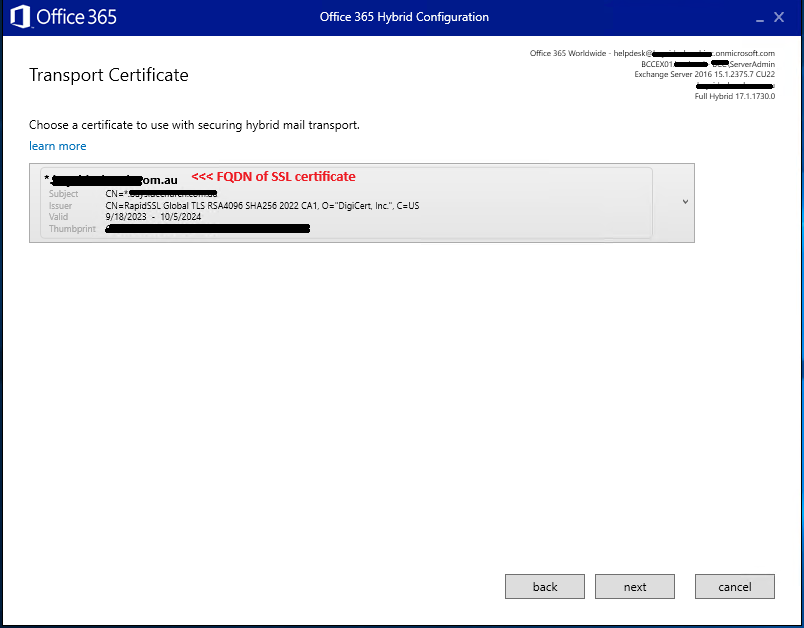

After this you will be asked to choose and confirm the correct SSL certificate to be used for communication between Exchange on-prem and Office 365 – ideally, this is the same SSL certificate used on the Exchange server for the Send & Receive connectors and Web Front Ends, as installed earlier in the guide. Ensure the current SSL certificate has been auto selected and no old / expired signed certificates exist – if they do, you need to stop and fix this up before re-running the Hybrid Configuration Wizard!

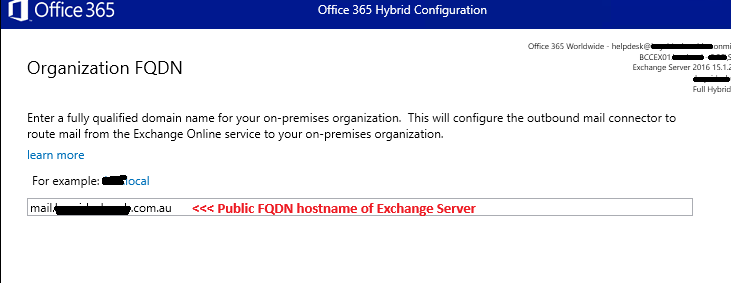

The next screen just confirms the public FQDN of the on-premise Exchange server as configured on the connectors – click Next if correct (365 will connect using this)

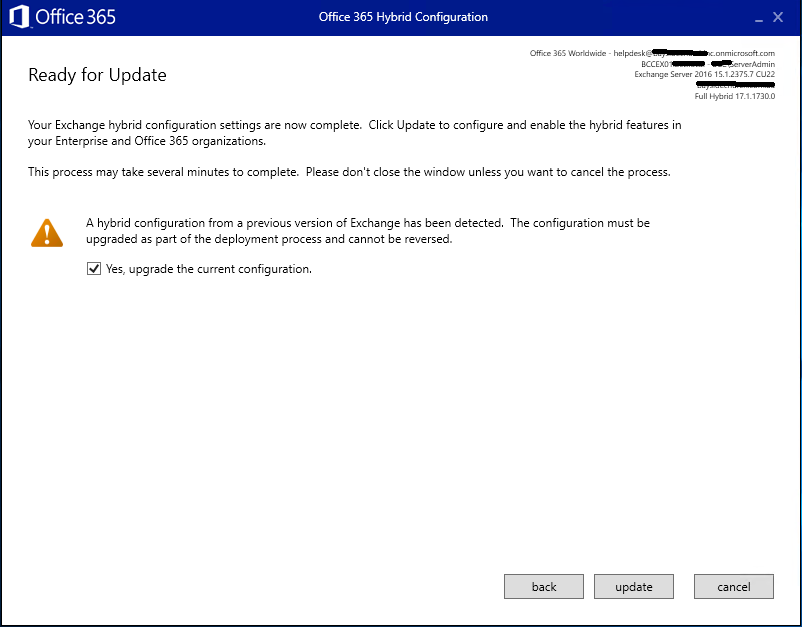

The wizard is now at the final stage and is ready to update the configuration, so tick Yes to upgrade and click Next

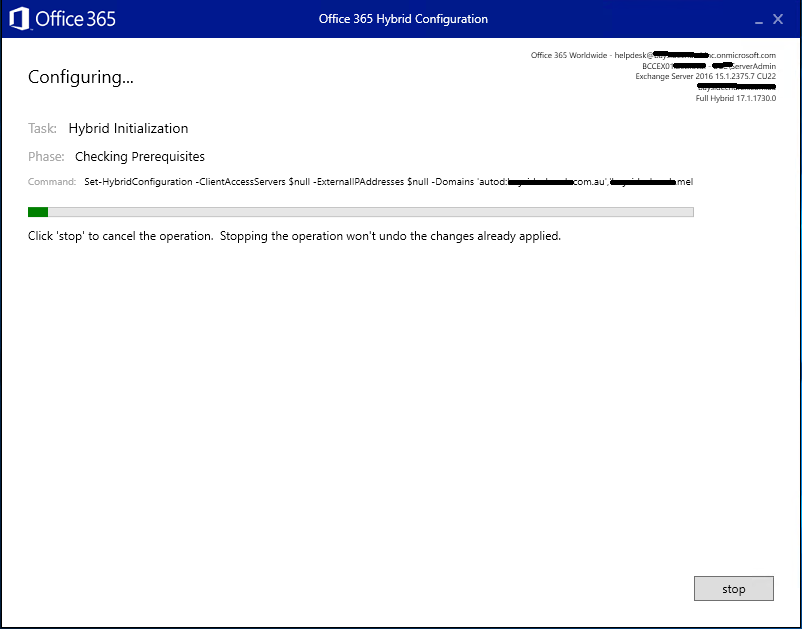

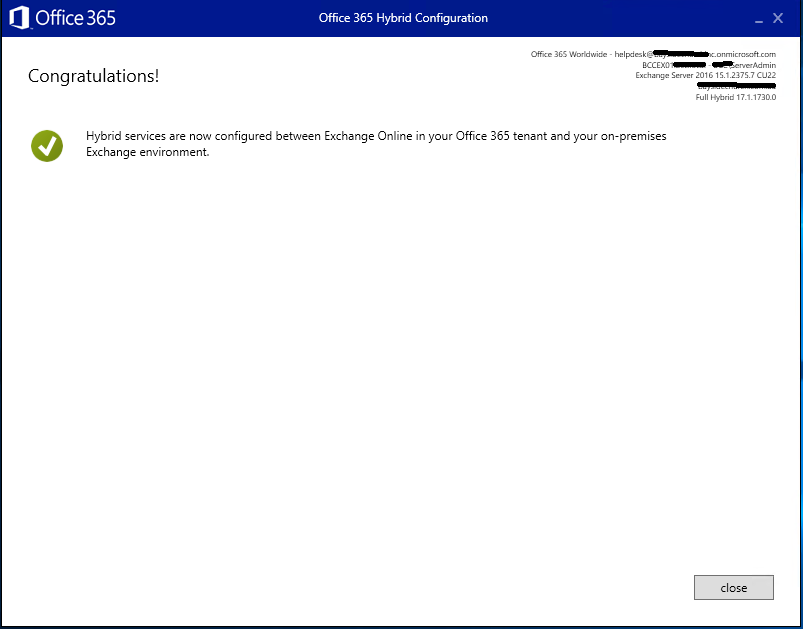

The process should only take up to 5 mins on a relatively standard & healthy environment (running Exchange 2016 or 2019)

At the end of the wizard, you should be presented with a Congratulations screen with the welcoming green tick, click Close.
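For a second opinion on what the wizard wrote, the resulting hybrid configuration object can be inspected from the on-premise Exchange PowerShell. A read-only sketch:

```powershell
# TlsCertificateName should reference the issuer/subject of the new certificate,
# and Domains should list the domains selected in the wizard.
Get-HybridConfiguration |
    Format-List Domains, Features, TlsCertificateName, SendingTransportServers, ReceivingTransportServers
```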

Now return to Exchange ECP > Mail Flow > Send Connectors

Edit the send connector for Office 365

Using the settings you noted down earlier, adjust the connector back to what you have noted down.

Don’t go away, we’re almost done, but not yet… Now we need to test & confirm it’s working properly…

Test Hybrid Exchange Configuration

To test we need two things – an on-premise Exchange mailbox, and to be logged in to the Microsoft 365 Admin Center as the tenant admin

The on-premise mailbox needs to be fully generated – one that has been logged into and has at least one mail item in its mailbox

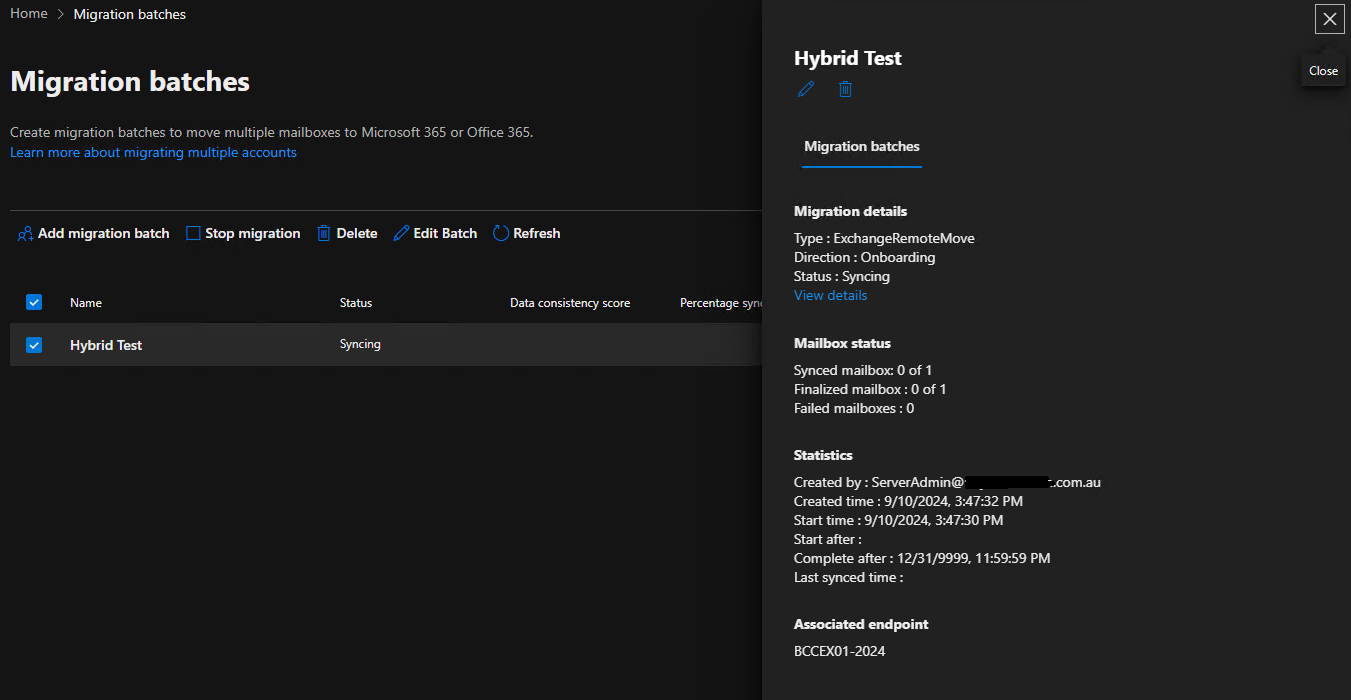

In the Admin Center, head to Exchange > Migration https://admin.exchange.microsoft.com/#/migrationbatch

In the upper right corner, click on Endpoints and ensure the on-premise Exchange server is present and looks correct. If not, delete it – we can re-add it during the next steps below…

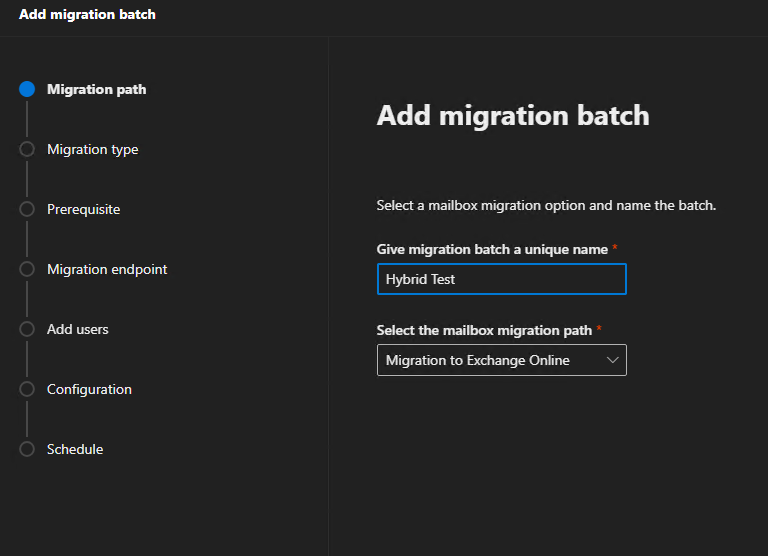

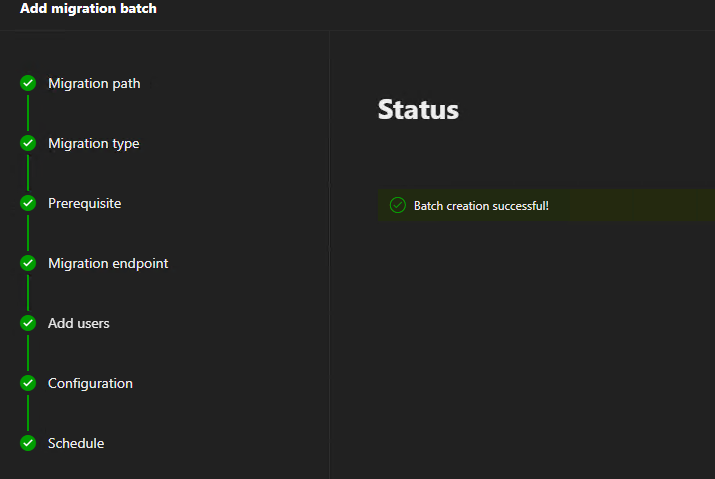

Click to Add Migration Batch and follow the wizard steps:

Name: Hybrid Test

Path: Migration to Exchange Online

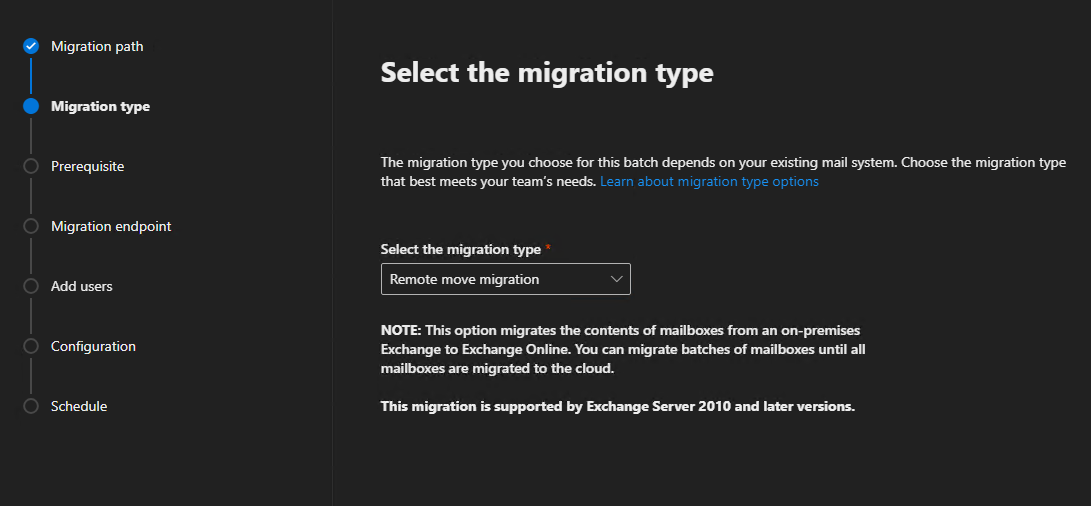

Type: Remote move migration

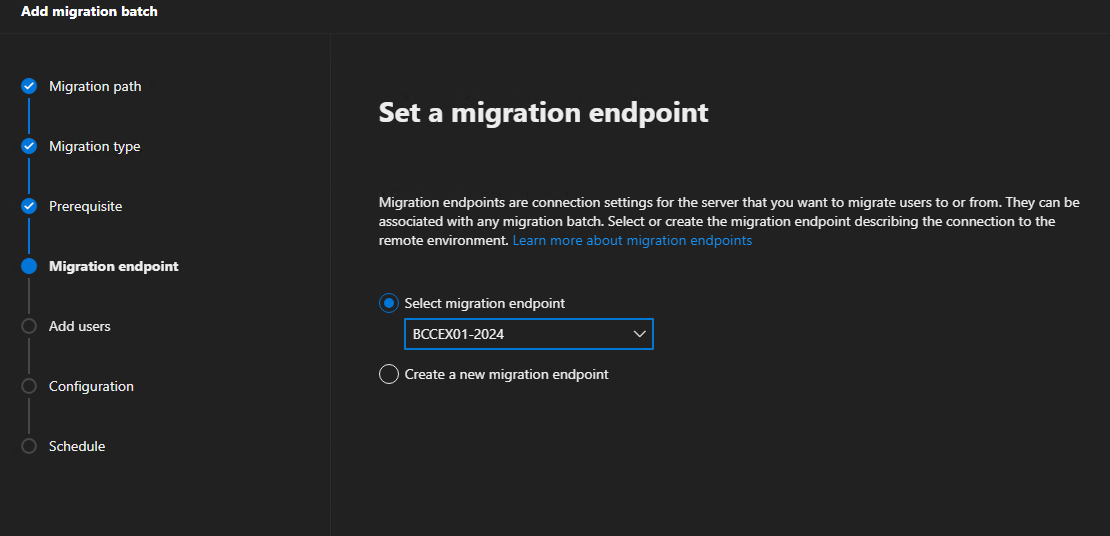

Select or add the on-premise Exchange server as your endpoint

(note: if you need to re-add it, please see the appendix at the end of this guide for what that looks like, but it should be pretty straightforward)

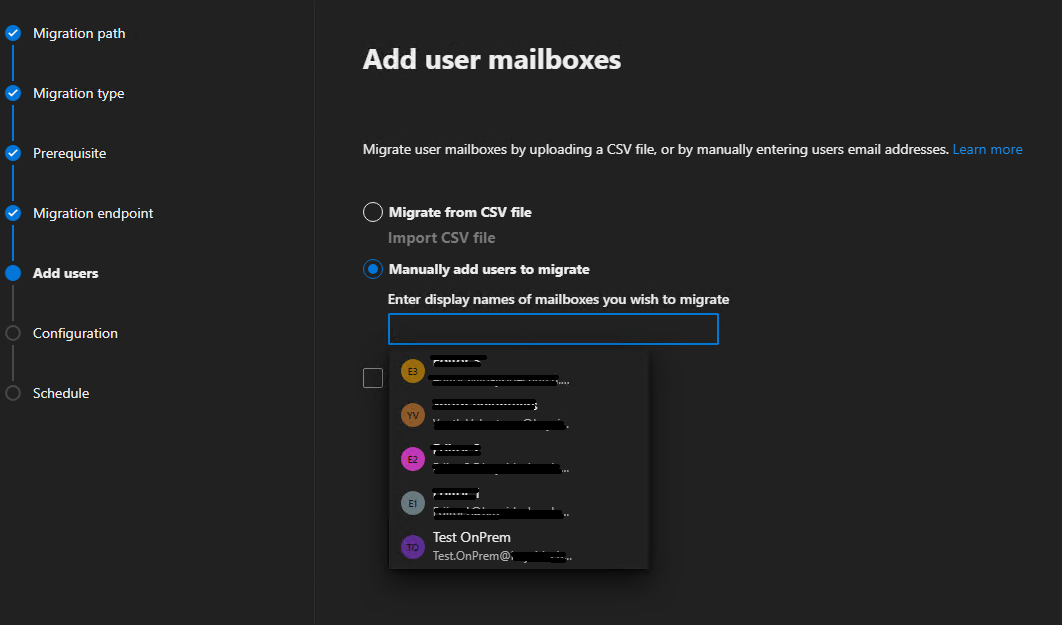

Select to Manually add users to migrate and select the test on-prem mailbox from the list when you click in the text entry field

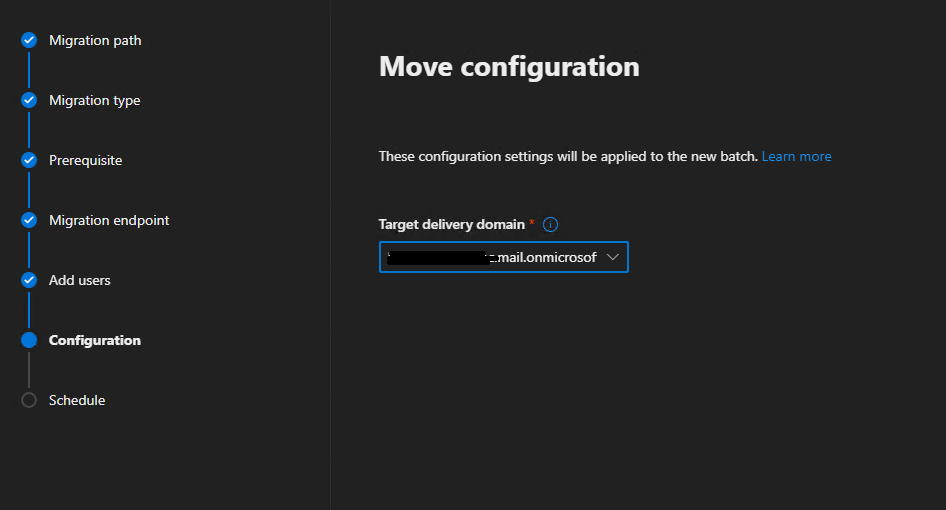

Select the target delivery domain (note: the test account must have this same domain as an alias address in its AD proxyAddresses attribute / as a mail alias)

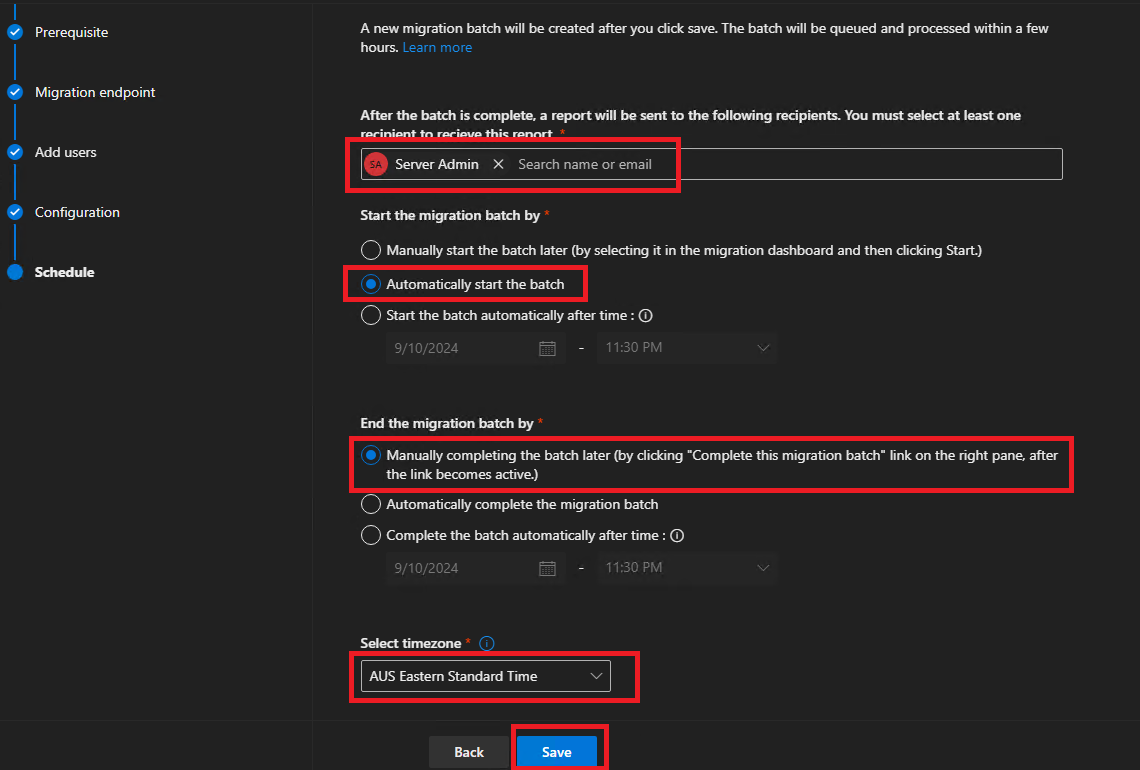

The final screen here will need to be set to:

Auto start the batch

Manually complete the batch (as we don’t actually intend on completing the batch)

Send email to the admin mailbox or a mailbox you have access to if you need to review the alerts

Click Save

Click Done

Now sit pretty and monitor the migration batch

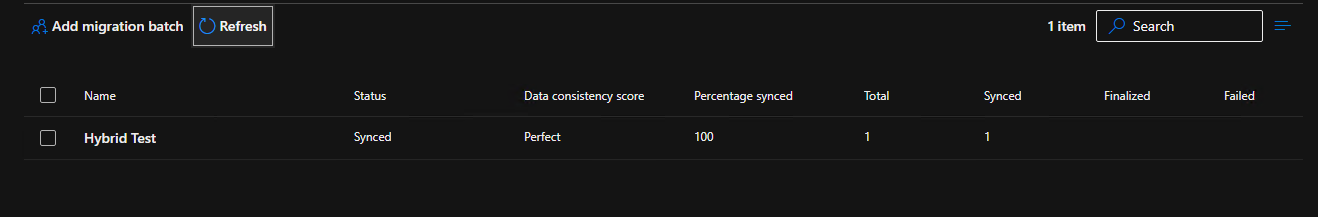

Once the Syncing status has changed to Synced, we know it’s working. A small mailbox should be done within 15 mins. Once it’s synced, you can simply stop and delete the batch – job done – close ticket!

If there are errors, you’ll need to troubleshoot and fix – we can’t leave it in a broken state as the Hybrid Exchange is used for creating new user accounts.

This is what a synced batch job looks like:

Synced, 100%, not finalized and not failed.

Select, stop, wait until stopped, then delete once at this stage.
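The batch can also be monitored and cleaned up from Exchange Online PowerShell. A sketch under assumptions: the ExchangeOnlineManagement module is installed, and the batch was named Hybrid Test as in the steps above.

```powershell
# Sign in to Exchange Online as the tenant admin.
Connect-ExchangeOnline

# Overall state of the test batch.
Get-MigrationBatch -Identity 'Hybrid Test' |
    Format-List Identity, Status, TotalCount, SyncedCount, FailedCount

# Per-mailbox state, including an error summary if something failed.
Get-MigrationUser -BatchId 'Hybrid Test' | Format-List Identity, Status, ErrorSummary

# Once the mailbox shows as Synced: stop the batch, wait until it reports Stopped, then remove it.
# Both commands prompt for confirmation.
Stop-MigrationBatch -Identity 'Hybrid Test'
Remove-MigrationBatch -Identity 'Hybrid Test'
```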

Appendix:

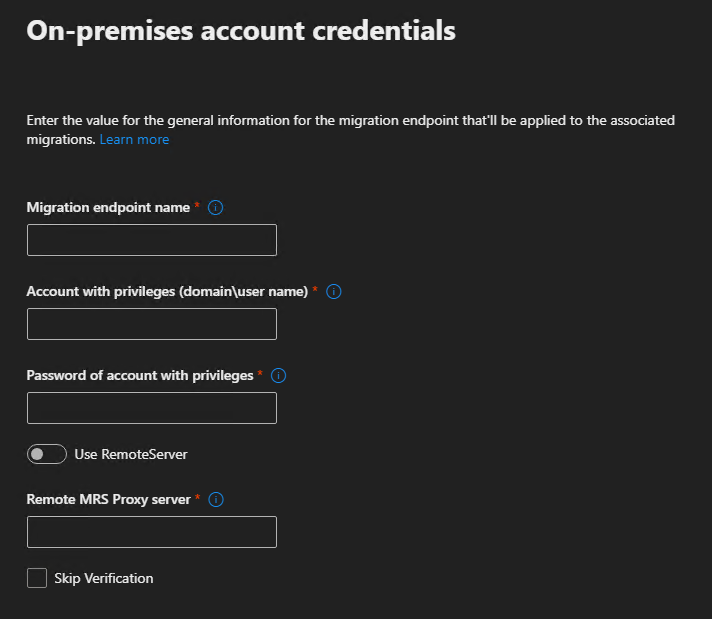

Creating a new Exchange Endpoint:

Give the endpoint a meaningful, short name ending in the year – if it’s old, we’ll likely see that and need to recreate it anyway.

The account name ideally will be a domain admin account that also has an on-premise mailbox, but the mailbox isn’t mandatory.

Remote MRS proxy server is the public FQDN of the Exchange server, eg: mail.domain.com

Don’t skip verification – we need confirmation that 365 can communicate with the on-premise server with the specified account

Once validated, continue on with the batch job creation as per above steps.
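The same verification can be run from Exchange Online PowerShell, which is useful when the endpoint wizard fails without a clear reason. A minimal sketch; mail.domain.com is the example FQDN from above and the credentials are the on-premise account described in this appendix.

```powershell
# Run in an Exchange Online PowerShell session (after Connect-ExchangeOnline).

# List existing migration endpoints and the server each one points at.
Get-MigrationEndpoint | Format-List Identity, EndpointType, RemoteServer

# Prompt for the on-premise account, then test that 365 can reach the MRS proxy with it.
$onPremCredential = Get-Credential
Test-MigrationServerAvailability -ExchangeRemoteMove -RemoteServer 'mail.domain.com' -Credentials $onPremCredential
```

If the test fails, the returned error usually points towards the cause, such as a certificate, firewall, or MRS proxy problem.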

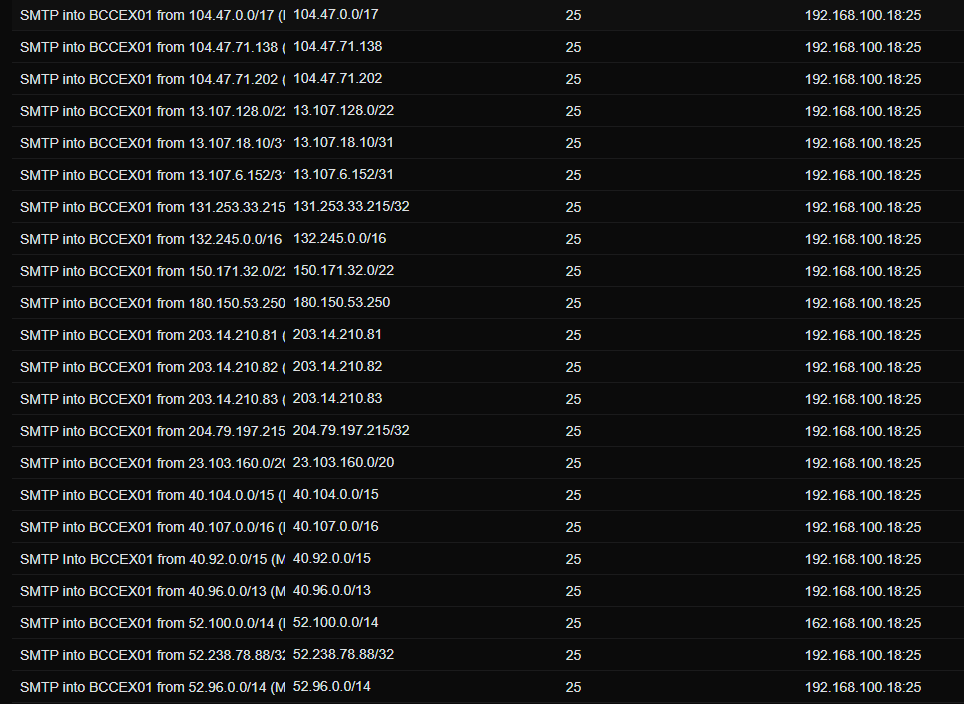

Firewall:

If the on-premise Exchange server isn’t being accessed externally by general users because all mailboxes are in 365, then it’s best to restrict HTTP, HTTPS and SMTP access to the on-premise Exchange server to only the IP addresses that require it (such as us, or the customer website if it uses SMTP) and to Microsoft 365.

This is the link for the IP addresses / network addresses that Microsoft has published for creating an ACL / whitelist on the customer firewall: https://learn.microsoft.com/en-us/microsoft-365/enterprise/urls-and-ip-address-ranges?view=o365-worldwide
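Microsoft also publishes the same data through a web service, which can save copying ranges by hand. The sketch below is not part of the original process: it assumes the endpoints.office.com web service for the worldwide instance and filters on the serviceArea, tcpPorts and ips fields it returns. Treat the output as a starting point and compare it against the published page before building an ACL.

```powershell
# The web service expects a client request ID in GUID format.
$clientRequestId = [guid]::NewGuid().ToString()
$uri = "https://endpoints.office.com/endpoints/worldwide?clientrequestid=$clientRequestId"

# Returns one object per endpoint set (serviceArea, urls, ips, tcpPorts, ...).
$endpointSets = Invoke-RestMethod -Uri $uri

# Exchange Online endpoint sets that include TCP port 25, IPv4 ranges only.
$endpointSets |
    Where-Object { $_.serviceArea -eq 'Exchange' -and $_.tcpPorts -match '\b25\b' } |
    ForEach-Object { $_.ips } |
    Where-Object { $_ -and $_ -notmatch ':' } |
    Sort-Object -Unique
```

The ranges change over time, so an allow list built this way needs to be reviewed periodically.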

Below is a sample firewall port forward / ACL for SMTP traffic allow list (using IPv4 IP/Networks):